Hi.

Welcome to my blog. I document my adventures in travel, style, and food. Hope you have a nice stay!

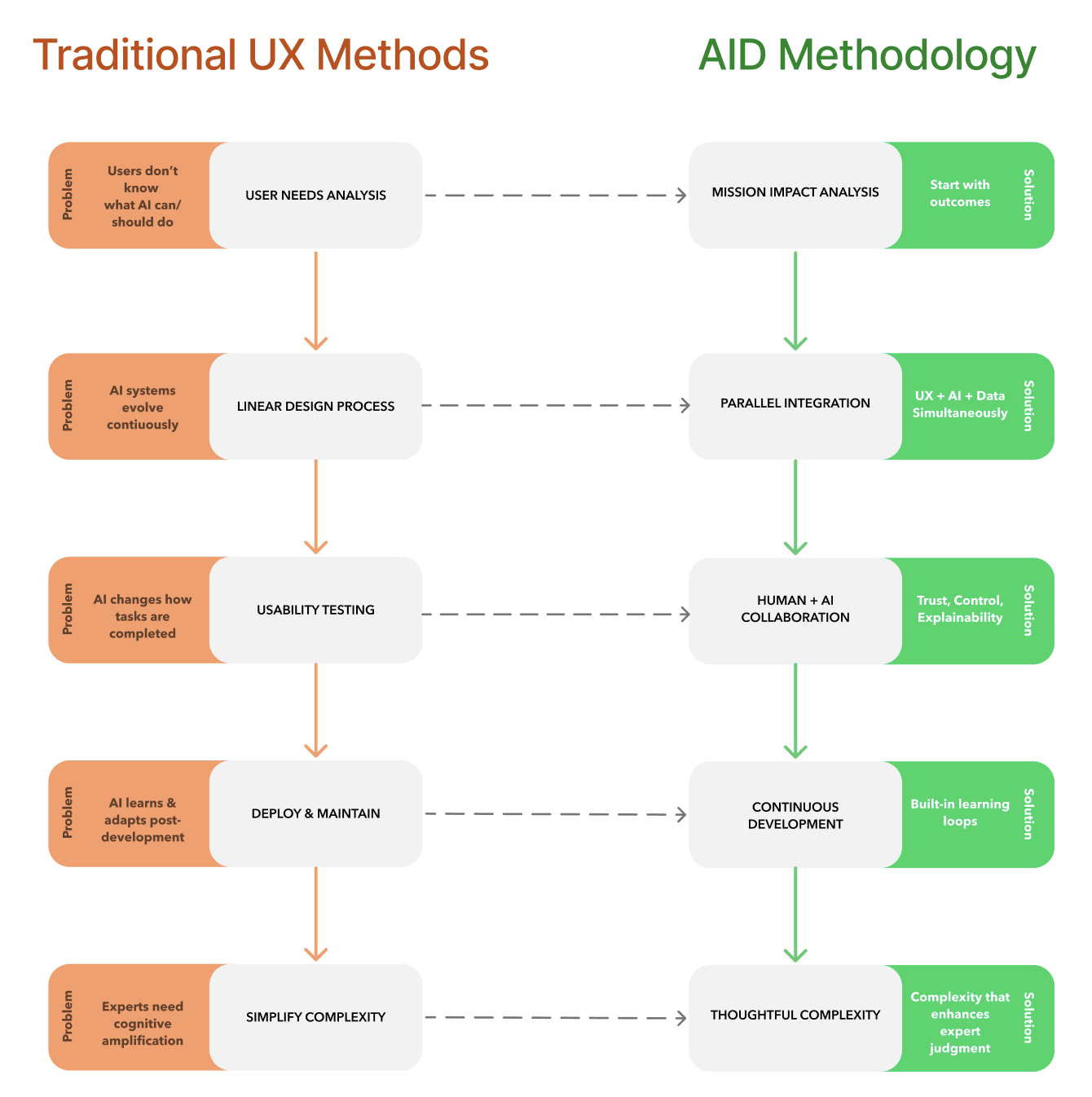

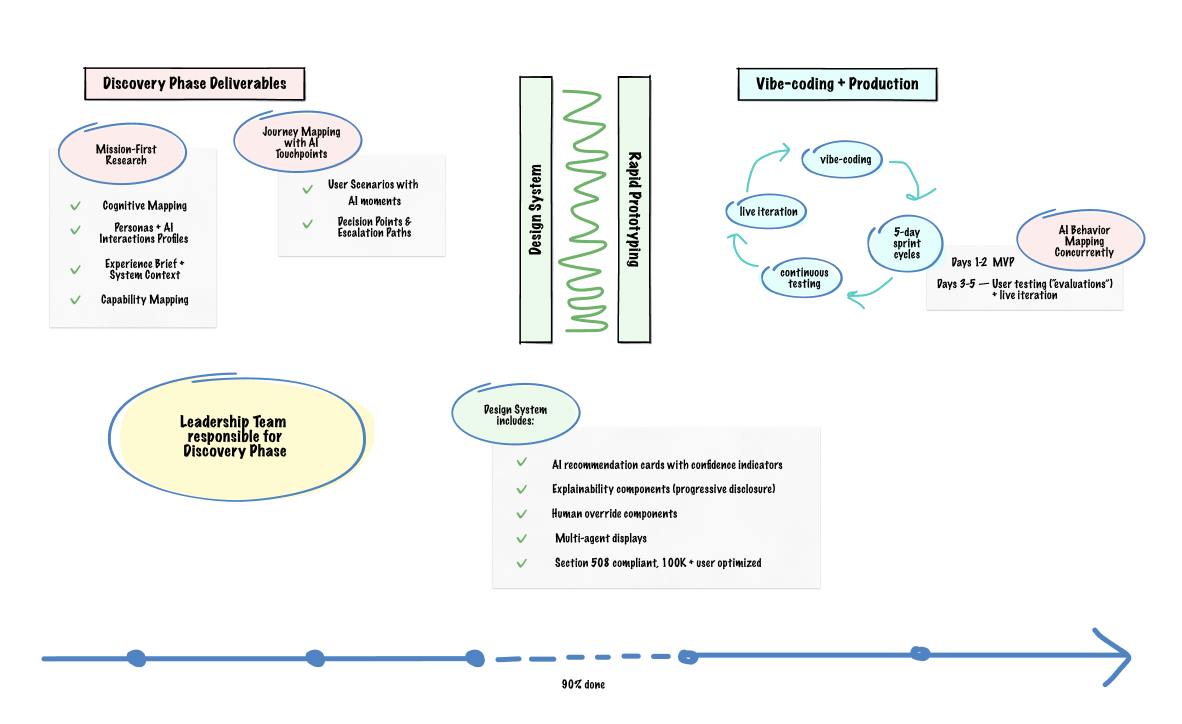

“My approach to AI UX design is grounded in traditional Design Thinking methodology, adapted for the unique challenges of government AI automation.”

Traditional UX starts with user needs. CAIDE starts with mission outcomes.

In AI-augmented systems, we must understand not just what users want to do, but what the AI should help them accomplish, and what it absolutely shouldn't.

Why This Matters for AI Systems:

AI recommendations carry weight: bad suggestions can derail expert decision-making

Mission-critical environments demand purposeful AI integration, not feature creep

User trust builds through consistently valuable AI assistance, not flashy technology

Traditional development follows the 80/20 rule: 80% building, 20% testing. AI systems demand the inverse: because AI fails unpredictably, we spend 80% of our effort on evaluation and 20% on building.

Our Testing Philosophy:

Phased Deployment: Assistant → Semi-autonomous → Autonomous (only after proven accuracy and trust)

Continuous Validation: Every AI recommendation tested with real users in real scenarios

Human-AI Collaboration Assessment: Beyond "can users complete tasks" to "do users trust, understand, and appropriately rely on AI?"
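The phased-deployment gate and the collaboration assessment above could fit together as in this minimal sketch. It assumes each evaluation session logs two facts: whether the AI's suggestion was correct, and whether the user accepted it. "Appropriate reliance" is then read as low over-reliance (accepting wrong suggestions) and low under-reliance (rejecting right ones). The `Decision` record, the metric names, and every threshold are hypothetical choices for illustration, not a published part of CAIDE.

```python
from dataclasses import dataclass
from enum import IntEnum


class Autonomy(IntEnum):
    """Deployment phases, in the only order the AI may advance through them."""
    ASSISTANT = 0
    SEMI_AUTONOMOUS = 1
    AUTONOMOUS = 2


@dataclass(frozen=True)
class Decision:
    """One logged interaction with an AI suggestion (hypothetical schema)."""
    ai_correct: bool     # was the suggestion right, per expert review?
    user_accepted: bool  # did the user act on it?


def reliance_metrics(decisions: list[Decision]) -> dict[str, float]:
    """Accuracy plus over- and under-reliance rates from logged decisions."""
    correct = [d for d in decisions if d.ai_correct]
    wrong = [d for d in decisions if not d.ai_correct]
    return {
        "accuracy": len(correct) / len(decisions) if decisions else 0.0,
        # Share of wrong suggestions users accepted anyway: trust too high.
        "over_reliance": sum(d.user_accepted for d in wrong) / len(wrong) if wrong else 0.0,
        # Share of right suggestions users rejected: trust too low.
        "under_reliance": sum(not d.user_accepted for d in correct) / len(correct) if correct else 0.0,
    }


# Hypothetical promotion thresholds; real values depend on the mission.
MIN_SAMPLES = 200
MIN_ACCURACY = 0.95
MAX_OVER_RELIANCE = 0.05
MAX_UNDER_RELIANCE = 0.20


def next_level(current: Autonomy, decisions: list[Decision]) -> Autonomy:
    """Advance one phase only when accuracy and appropriate reliance are both proven."""
    if current is Autonomy.AUTONOMOUS or len(decisions) < MIN_SAMPLES:
        return current
    m = reliance_metrics(decisions)
    if (m["accuracy"] >= MIN_ACCURACY
            and m["over_reliance"] <= MAX_OVER_RELIANCE
            and m["under_reliance"] <= MAX_UNDER_RELIANCE):
        return Autonomy(current + 1)
    return current
```

Promotion moves one phase at a time and never jumps straight to autonomous. Accurate suggestions alone are not enough: if users rubber-stamp the few wrong ones, the gate stays closed. A fuller version would also demote the system when either measure regresses.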

AI systems aren't monolithic black boxes. They're teams of specialized agents working together. Like any team, they need clear roles, coordination protocols, and human oversight.

Our Orchestration Approach:

Specialized Agents: Data validation, pattern detection, and recommendation synthesis, each with defined responsibilities

Human Checkpoints: Clear escalation points where AI defers to human judgment

Transparent Coordination: Users understand which "AI team member" is making each suggestion and why
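The orchestration approach above is sketched minimally here. It uses three stub agents matching the roles the text names (data validation, pattern detection, recommendation synthesis) and a single confidence threshold as the human checkpoint. The agent functions, the `Suggestion` type, the record fields, and the 0.8 threshold are hypothetical placeholders; a real system would call models inside each agent.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Suggestion:
    agent: str         # which "AI team member" made it, shown to the user
    text: str          # the suggestion itself
    confidence: float  # agent's self-reported confidence, 0.0 to 1.0
    rationale: str     # the "why", surfaced alongside the suggestion


# Each agent sees the input record plus everything earlier agents said.
Agent = Callable[[dict, list[Suggestion]], Suggestion]

# Hypothetical human checkpoint: below this confidence, the AI defers.
ESCALATION_THRESHOLD = 0.8


def validate_data(record: dict, trail: list[Suggestion]) -> Suggestion:
    missing = [k for k in ("source", "value") if k not in record]
    if missing:
        return Suggestion("data-validator", "Reject record", 0.0, f"missing fields: {missing}")
    return Suggestion("data-validator", "Record valid", 1.0, "all required fields present")


def detect_pattern(record: dict, trail: list[Suggestion]) -> Suggestion:
    # Stub rule: flag values above a fixed limit as anomalous.
    anomalous = record["value"] > 100
    return Suggestion("pattern-detector", "Anomaly" if anomalous else "Normal", 0.9,
                      f"value={record['value']} vs. limit 100")


def synthesize(record: dict, trail: list[Suggestion]) -> Suggestion:
    # Combine upstream findings; never claim more confidence than the weakest input.
    flagged = any(s.text == "Anomaly" for s in trail)
    confidence = min((s.confidence for s in trail), default=0.0)
    action = f"Review {record['source']} before proceeding" if flagged else "No action needed"
    return Suggestion("recommendation-synthesizer", action, confidence,
                      "based on: " + "; ".join(f"{s.agent}: {s.text}" for s in trail))


def orchestrate(record: dict, agents: list[Agent]) -> tuple[list[Suggestion], bool]:
    """Run agents in order; return the attributed trail and whether a human must decide."""
    trail: list[Suggestion] = []
    for agent in agents:
        suggestion = agent(record, trail)
        trail.append(suggestion)  # every step stays attributable to its agent
        if suggestion.confidence < ESCALATION_THRESHOLD:
            return trail, True    # human checkpoint: stop and defer to a person
    return trail, False


trail, escalated = orchestrate({"source": "sensor-7", "value": 140},
                               [validate_data, detect_pattern, synthesize])
for s in trail:
    print(f"[{s.agent}] {s.text} ({s.confidence:.0%}): {s.rationale}")
```

Escalation halts the pipeline instead of letting later agents build on a shaky result. The printed trail is what makes coordination transparent: every line names the agent, its confidence, and its reason.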

* The CAIDE Framework is copyrighted by Neale Designs © 2025-2026.

AI Partnership: This site demonstrates my approach to AI collaboration, in which human ideas are enhanced by AI capability.

Content and insights: 100% my experience and thinking.

Organization and articulation: Claude AI assistance.

Design mockups: Figma Make.

Imagery: Midjourney.

Learn more about human-AI collaboration in my methodology.